Add Real AI to Your Lovable Project with One Prompt

When Lovable Isn’t Enough

If you’ve spent time building in Lovable, you know how quickly you can get a good-looking UI up and running. But once you try to add real AI agents or complex logic, things get tricky. The platform was built for speed, not depth.

This tutorial introduces a workaround: a prompt generator that links your Lovable frontend to a real AI agent you’ve built and tested visually in Momen. It requires no code and gives you more control over how the agent behaves.

What’s Momen?

Momen is the no‑code full‑stack web app builder that powers this tool. It handles the parts Lovable doesn’t focus on — things like AI agents, backend workflows, and databases. You can visually create and test your AI agent in Momen, confirm it works, and then use the prompt generator to connect it back to Lovable with a single prompt.

If you’ve hit the wall trying to add real agentic AI to your Lovable projects, this tool is a way forward.

What You'll Learn

How to visually build a real AI agent in Momen

How to generate a Lovable-compatible prompt using your agent config

How to plug that prompt into Lovable to create a fully working AI app

What You'll Need

A Lovable account

A Momen account (free plan works)

A clear idea of what you want your AI to do

4 Steps to Build an AI App in Lovable

Before we dive in, here's the basic idea:

Momen powers your backend AI agent, and this tool helps you generate a single prompt that tells Lovable how to connect to it.

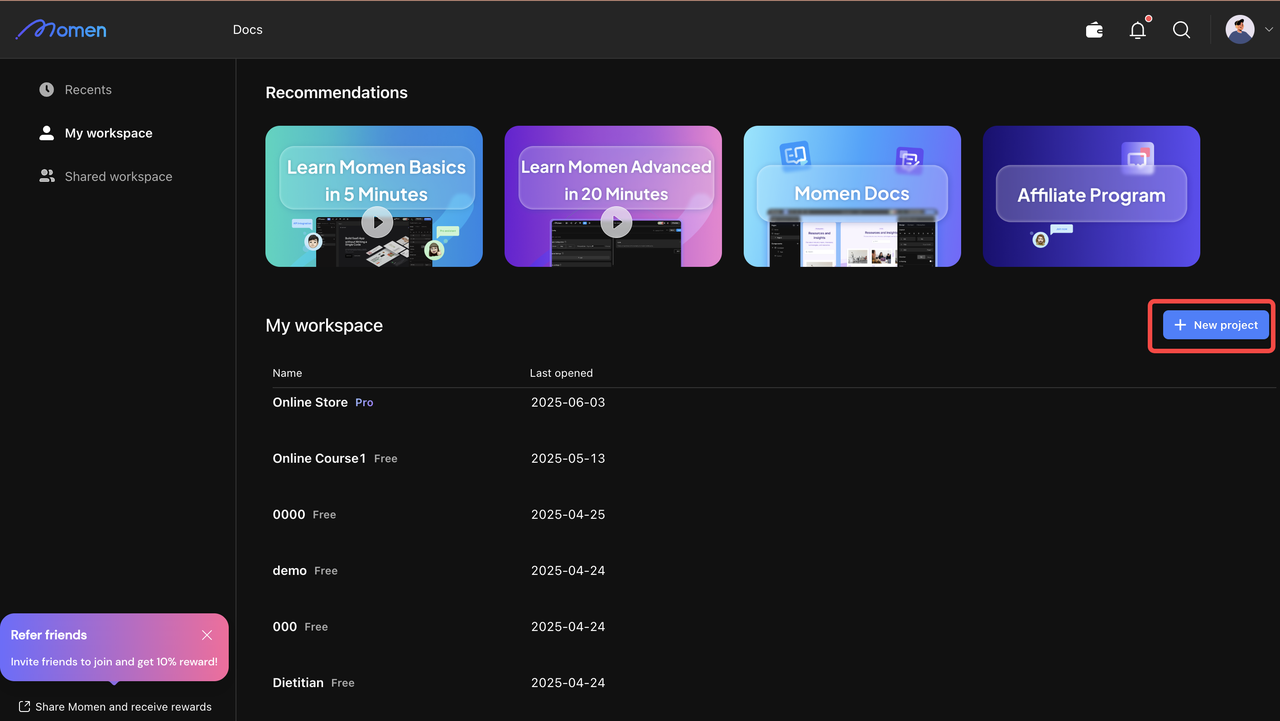

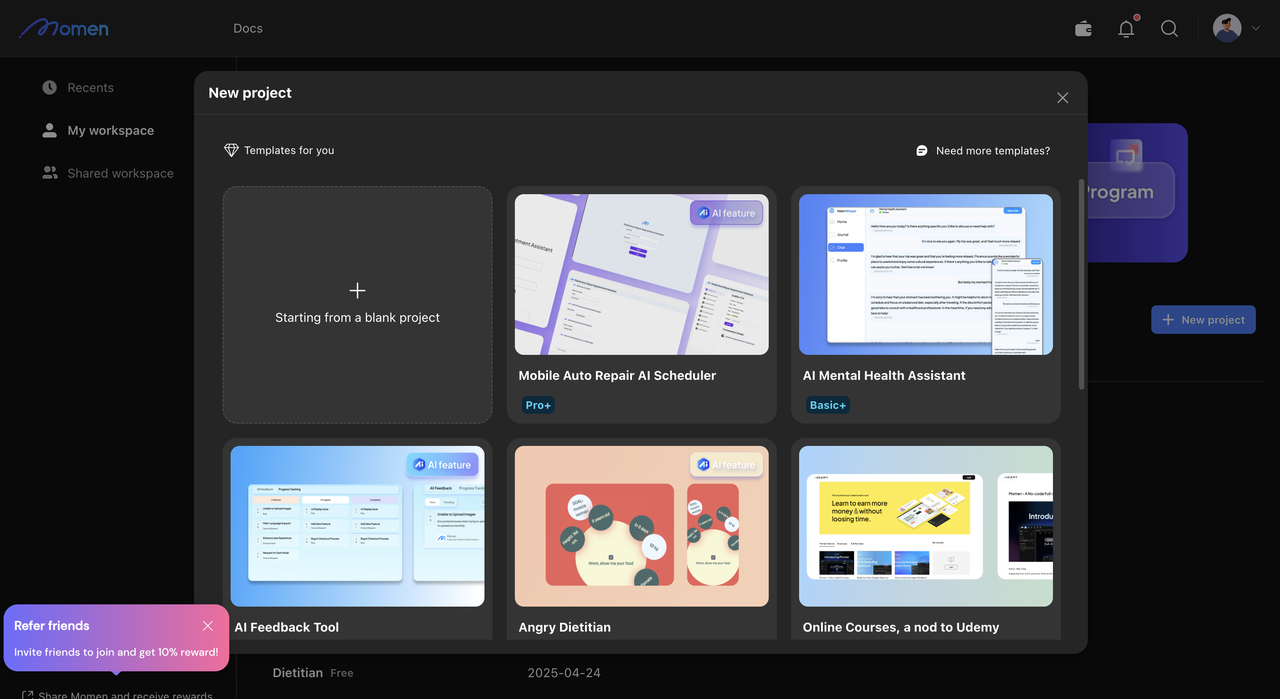

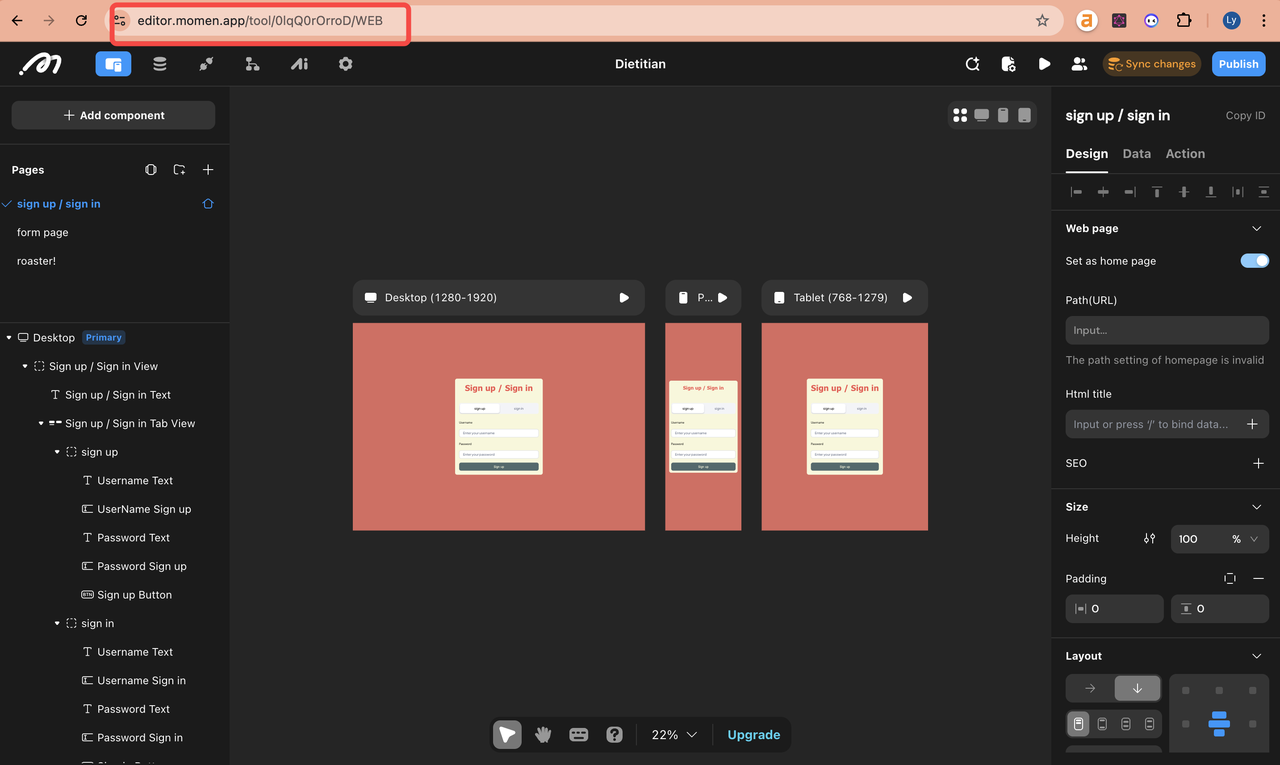

Step 1: Create Your Momen Project

Sign up at momen.app and create a new project.

You can either start from scratch or choose a template with AI agents already configured.

Step 2: Build Your AI Agents in Momen

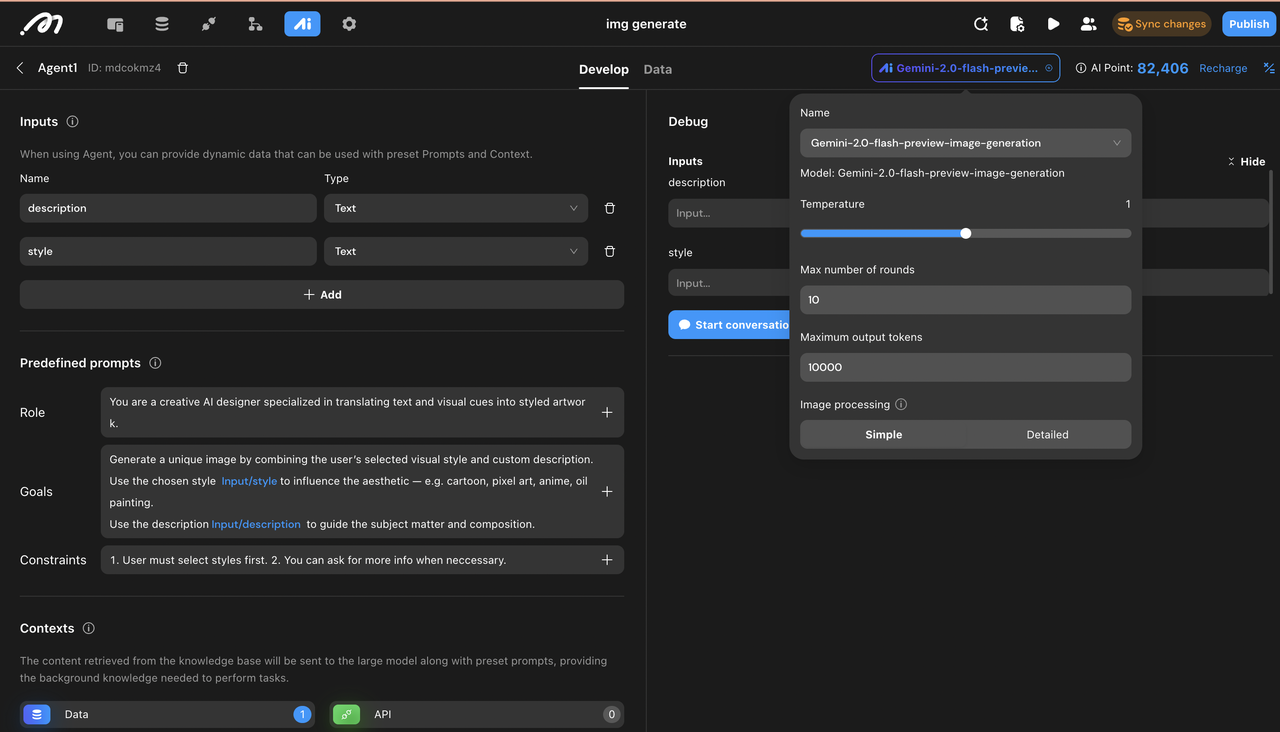

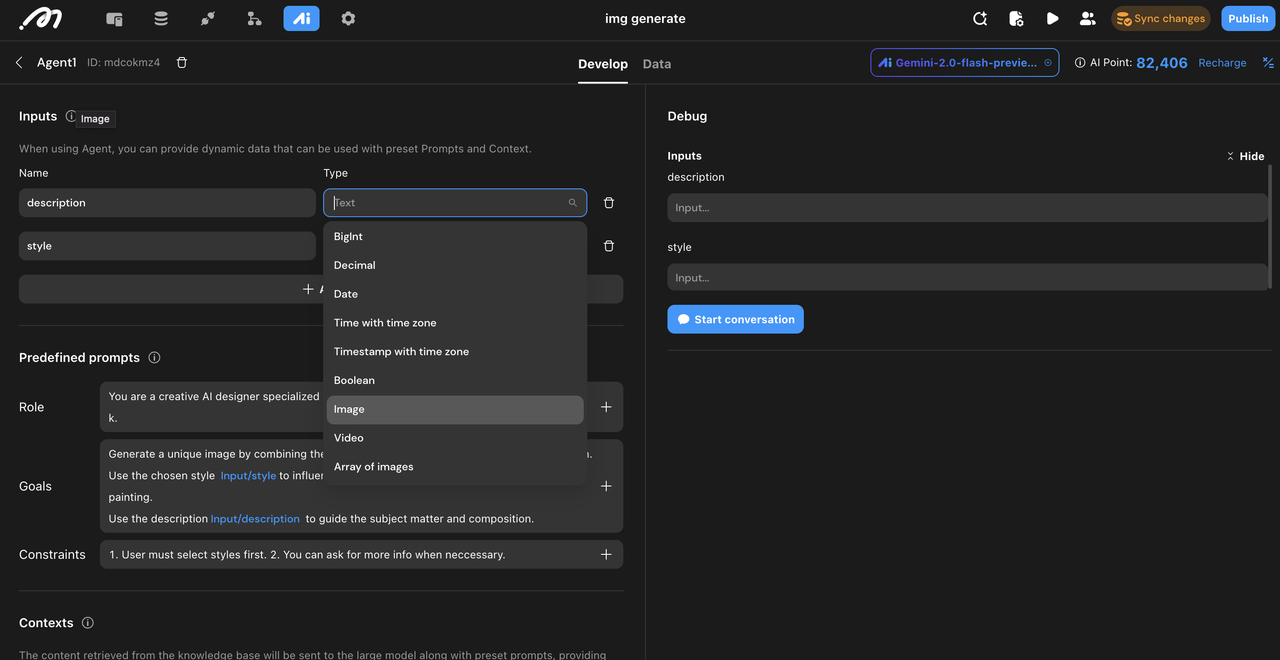

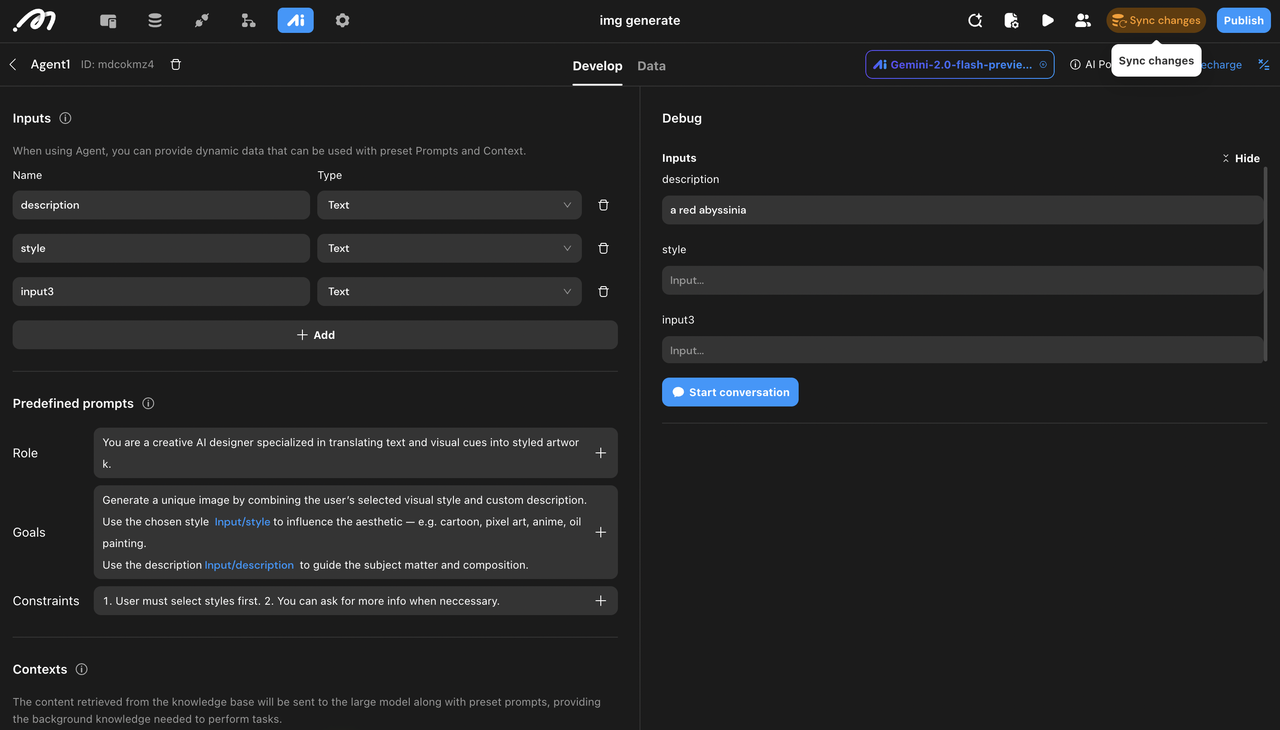

Click the "AI" button on the top bar to open the agent panel. Then click “Add agent” to start building.

You’ll see several sections in the agent builder:

General Configuration

Choose your preferred LLM (GPT-4o, Gemini 2.5, etc.), and optionally adjust settings like temperature (how varied or creative the responses are), output length, and conversation rounds.
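If the temperature setting feels abstract, the textbook formulation below may help. It is a minimal sketch of how temperature reshapes a probability distribution over candidate tokens; the scores are made up, and the function name is only illustrative. Individual model providers may implement sampling differently.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw scores to probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


scores = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
focused = softmax_with_temperature(scores, 0.2)  # low temperature
varied = softmax_with_temperature(scores, 1.5)   # high temperature

# The top candidate dominates at low temperature and less so at high temperature.
print(round(focused[0], 2), round(varied[0], 2))
```

In practice, a lower temperature suits tasks like summarizing or extracting data, while a higher one suits brainstorming or creative writing.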

Input

In the Input section, define what the user needs to provide, e.g., description or style. These are dynamic fields that the user fills in.

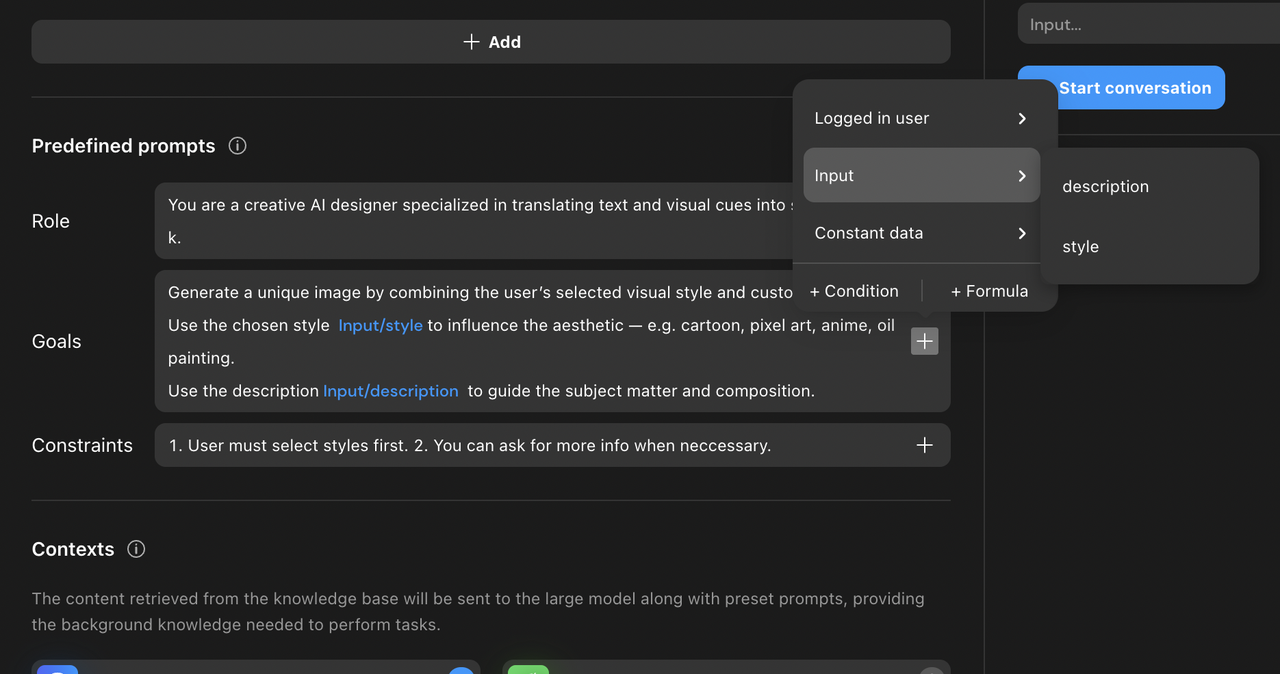

Prompt

Set the agent’s Role (what it acts as) and Goal (what it needs to do). Example: “You are a helpful assistant that summarizes user input.” You can also add Constraints like “Use markdown only” or “Don’t include sources.”

Use + to reference inputs, user info, constants, or formulas in the prompt.
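Conceptually, the Role, Goal, Constraints, and any referenced inputs end up combined into one block of instructions for the model. The sketch below illustrates that idea only. The `build_prompt` function, the `$placeholder` syntax, and the layout are assumptions made for illustration, not how Momen assembles prompts internally.

```python
from string import Template


def build_prompt(role: str, goal: str, constraints: list[str], inputs: dict[str, str]) -> str:
    """Combine role, goal, and constraints, filling $placeholders from user inputs."""
    lines = [f"Role: {role}", f"Goal: {goal}"]
    if constraints:
        lines.append("Constraints:")
        lines.extend(f"- {c}" for c in constraints)
    # substitute() raises KeyError if a referenced input is missing
    return Template("\n".join(lines)).substitute(inputs)


prompt = build_prompt(
    role="You are a helpful assistant that summarizes user input.",
    goal="Summarize the following text in a $style style: $description",
    constraints=["Use markdown only", "Don't include sources"],
    inputs={"style": "concise", "description": "Quarterly sales rose 12%."},
)
print(prompt)
```

Failing loudly on a missing input mirrors a useful habit when configuring the agent: every field the prompt references should be defined in the Input section first.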

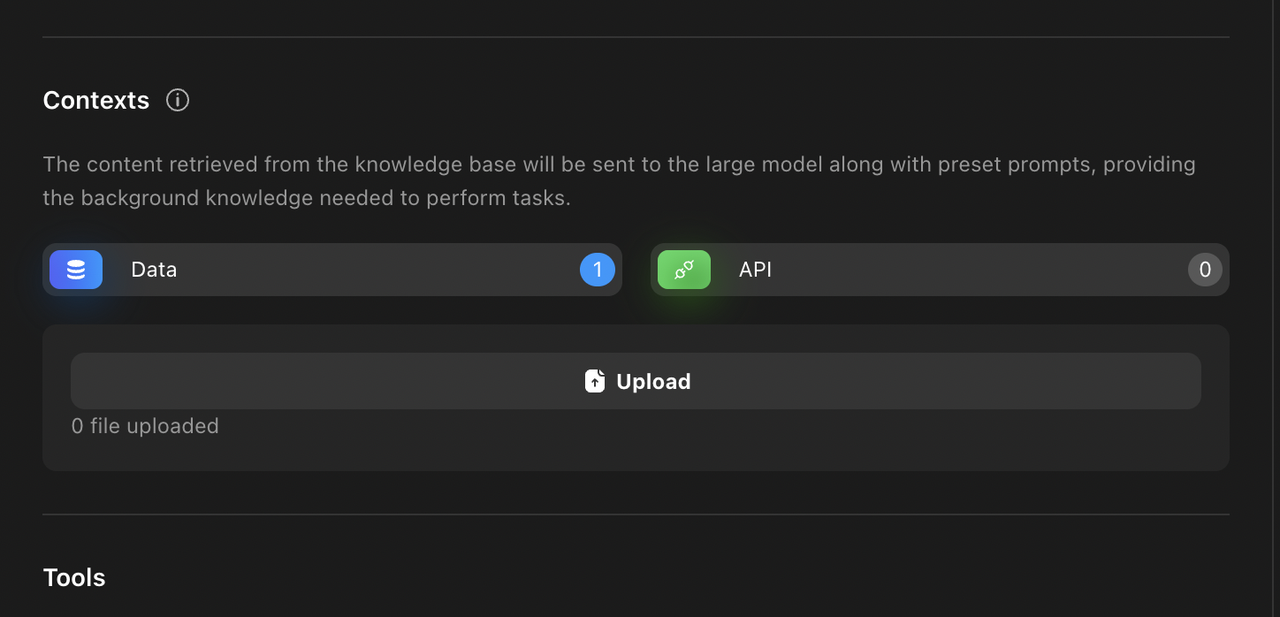

Context

Want your agent to access knowledge? Add data from database tables or connect APIs. APIs can even link your agent to external services.

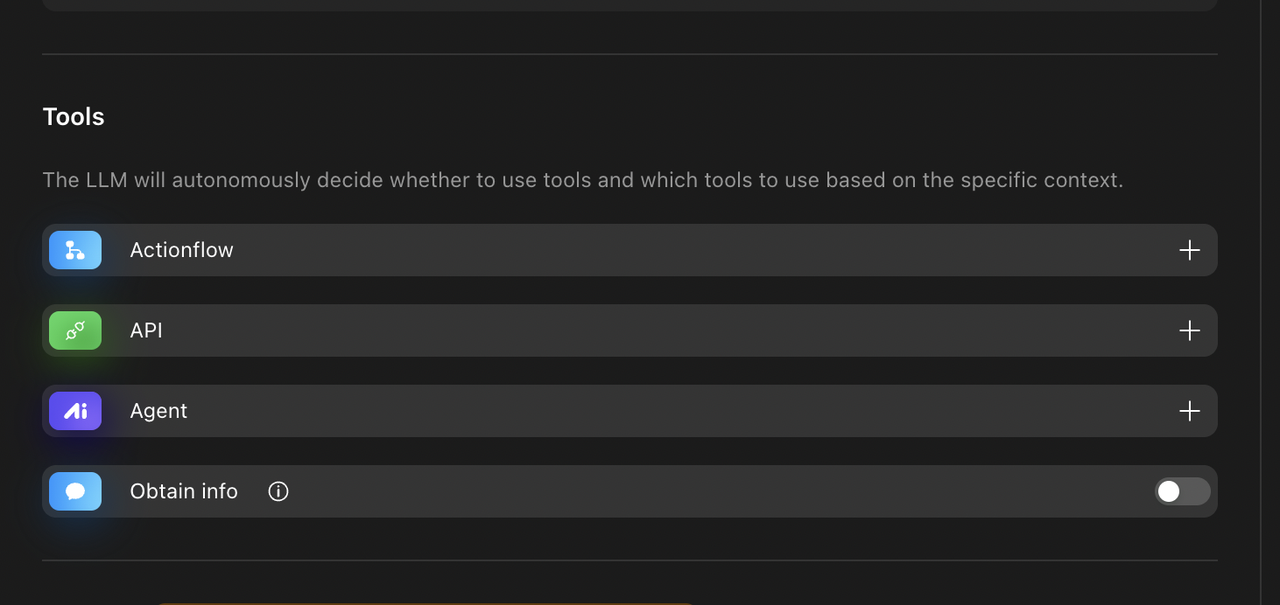

Tools (Advanced)

Configure backend workflows (Actionflow), trigger APIs, or invoke other agents. This is how you give your agent real functionality beyond LLM text.

Output

In the Output section, you can configure how the AI response is delivered:

Default Output: The model will respond freely in plain text. You can also enable Streaming, which shows the response as it’s being generated — a smoother UX, especially for chat-based interactions.

Structured Output: If your app needs structured data (a JSON object), you can define the expected format here. This is helpful when your Lovable UI relies on specific fields.

Note: Gemini 2.0 Flash (used for image generation) does not support structured outputs.
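To see why structured output matters for a UI, consider what the receiving side has to do with the response. The sketch below parses a JSON response and checks that the fields a UI relies on are present and correctly typed. The field names in `EXPECTED_FIELDS` are hypothetical; yours would match whatever format you define in Momen.

```python
import json

# Hypothetical fields a Lovable UI might expect; define your own in Momen.
EXPECTED_FIELDS = {"title": str, "summary": str, "tags": list}


def parse_structured_output(raw: str) -> dict:
    """Parse a JSON response and check required fields and their types."""
    data = json.loads(raw)  # raises json.JSONDecodeError on invalid JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for name, expected_type in EXPECTED_FIELDS.items():
        if name not in data:
            raise ValueError(f"missing field: {name}")
        if not isinstance(data[name], expected_type):
            raise ValueError(f"field {name!r} should be {expected_type.__name__}")
    return data


result = parse_structured_output(
    '{"title": "Q3 report", "summary": "Sales rose 12%.", "tags": ["sales"]}'
)
print(result["title"])
```

With free-form text output, a check like this would fail unpredictably, which is why a fixed format is the safer choice whenever the UI displays individual fields.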

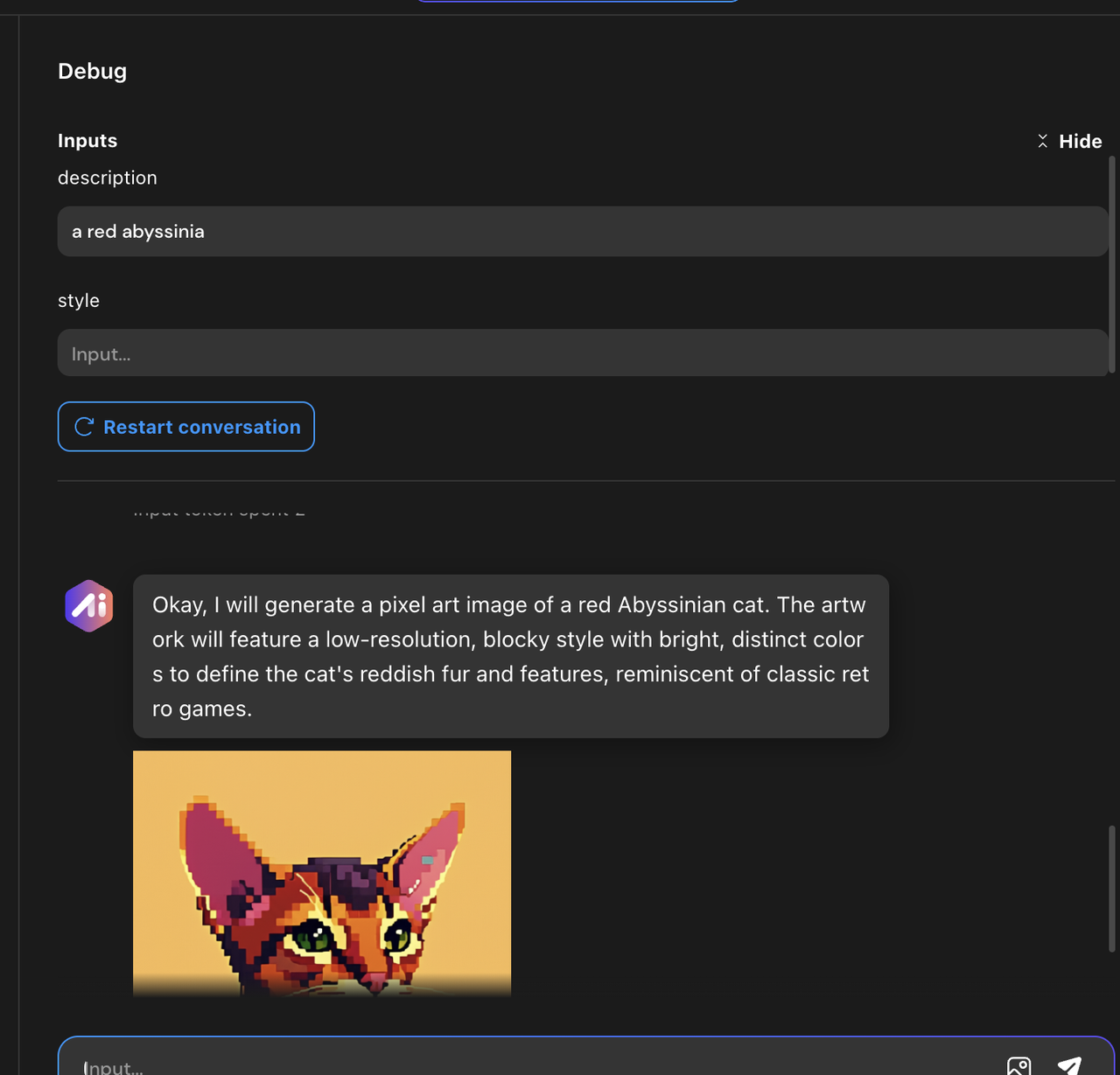

Debug

On the right, test your agent and inspect each round: input, output, tool usage, and system messages.

Once done, click “Sync” to save your configuration.

💡 Pro Tip: Test your agent first in Momen’s debug panel — if it works here, it’ll work in Lovable.

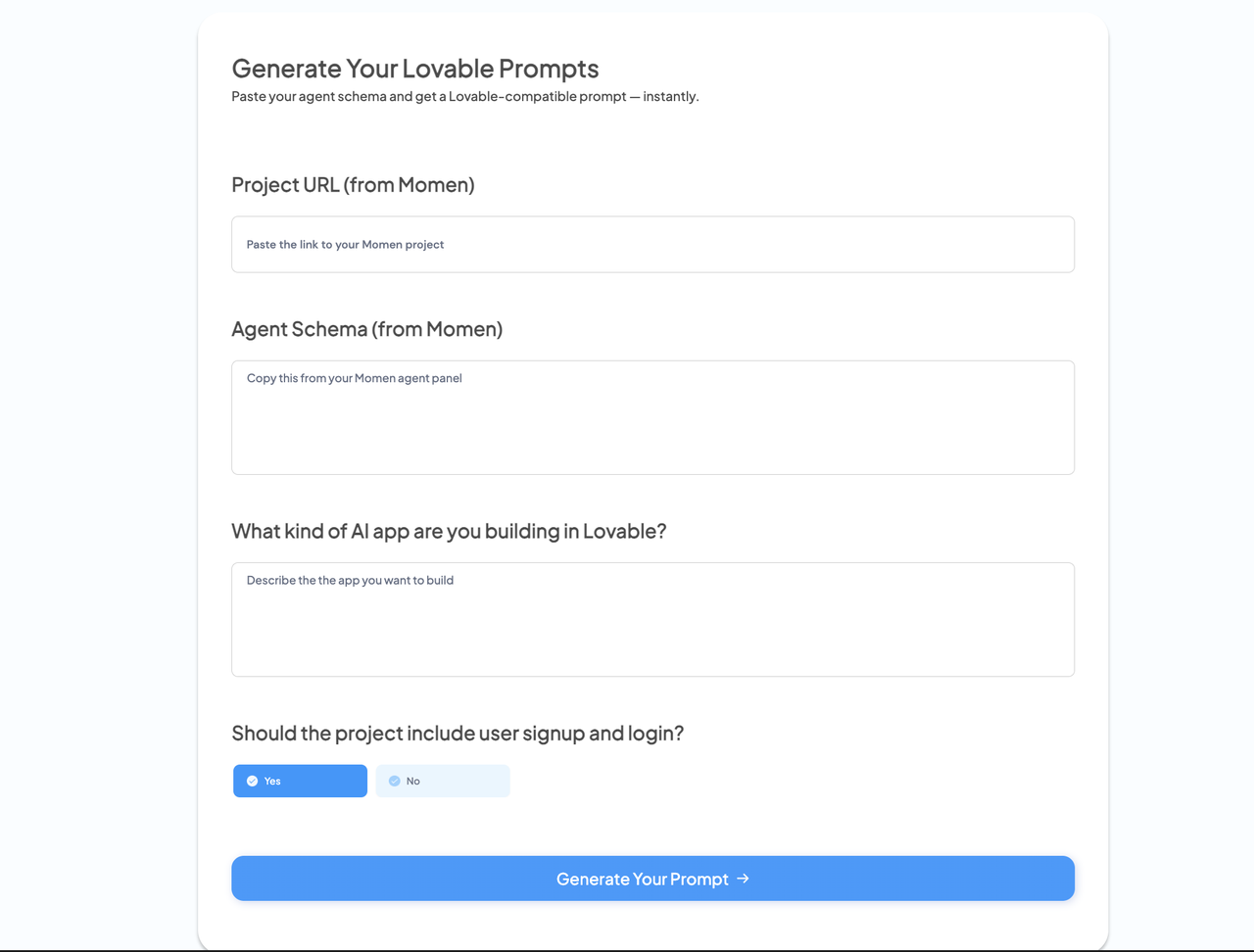

Step 3: Generate Your Prompt

Copy your project URL from your browser.

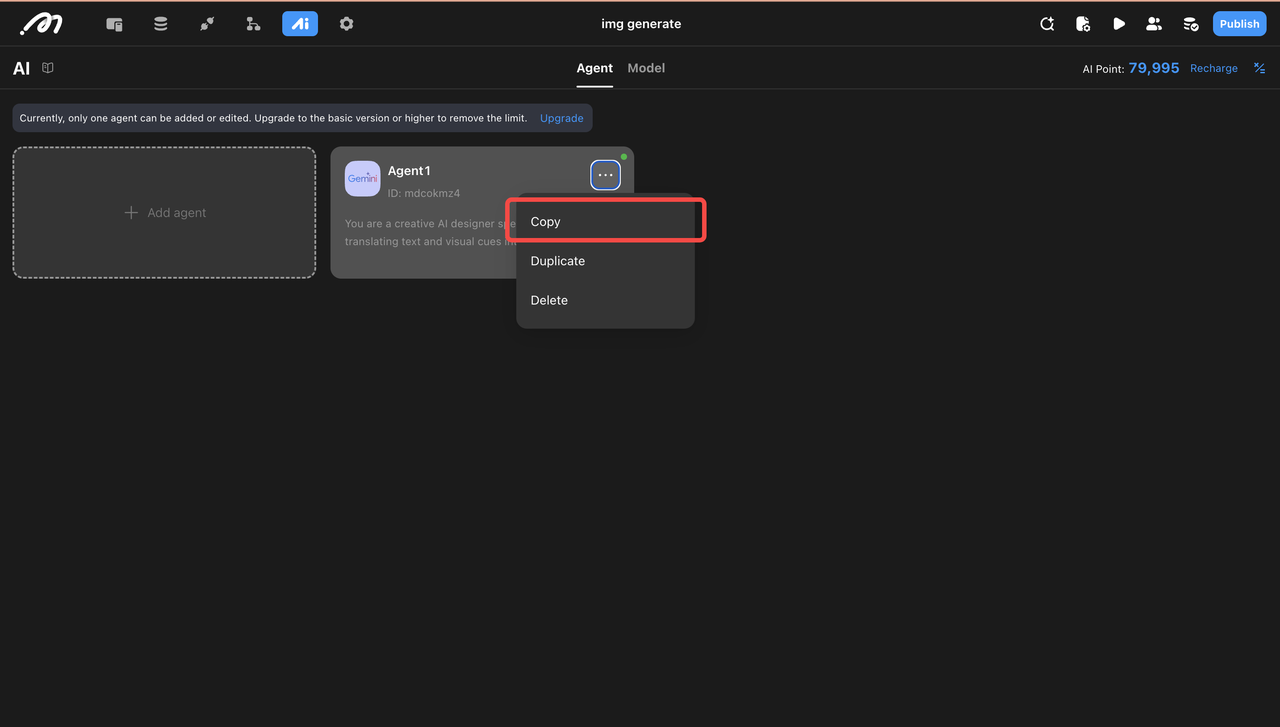

In the agent panel, click the ⋯ menu and select "Copy". This includes your agent setup.

Open the Lovable prompt generator.

Paste in:

Project URL

Agent Schema

Describe your app

Choose whether you need authentication

Click Generate — and your Lovable-compatible prompt will appear.

Step 4: Paste the Prompt in Lovable

In your Lovable project, paste the generated prompt.

Wait 1–5 minutes — Lovable will start generating your AI app powered by the Momen agent.

That’s it. You’ve now got a frontend built with Lovable, and real AI logic powered by Momen — all with one prompt.

FAQs

What exactly is the “agent schema”? Where do I find it?

The agent schema is just a copy of your agent’s “setup”: everything you configured in Momen (the model, prompts, tools, etc.) in one package. To find it, go to your agent panel in Momen, click the three-dot menu (⋯) in the top right, and select “Copy”.

What happens if I paste the wrong schema or URL?

Our tool will immediately show an error message if something’s off — for example, if the URL isn’t a Momen project link or the schema doesn’t match the format.

You won’t waste time generating a broken prompt. Just fix the input (copy the correct project URL or schema) and try again.
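If you want to sanity-check your inputs before pasting them, the kind of validation described above might look roughly like the sketch below. Both checks are assumptions: that project links live on momen.app or one of its subdomains, and that the copied schema is a JSON object. The generator's actual rules are not documented here, and the example URL is made up.

```python
import json
from urllib.parse import urlparse


def check_inputs(project_url: str, agent_schema: str) -> list[str]:
    """Return a list of problems; an empty list means both inputs look plausible."""
    problems = []
    host = urlparse(project_url).hostname or ""
    # Assumption: project links live on momen.app or a subdomain of it.
    if not (host == "momen.app" or host.endswith(".momen.app")):
        problems.append("URL is not a momen.app link")
    # Assumption: the copied agent schema is a JSON object.
    try:
        if not isinstance(json.loads(agent_schema), dict):
            problems.append("schema is not a JSON object")
    except json.JSONDecodeError:
        problems.append("schema is not valid JSON")
    return problems


# Made-up URL and schema, for illustration only.
print(check_inputs("https://editor.momen.app/project/demo", '{"name": "summarizer"}'))
print(check_inputs("https://example.com", "not a schema"))
```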

Can I add multiple agents to the same Lovable project using this tool?

Not directly — right now, the tool only links one agent to a Lovable project at a time.

However, inside Momen’s AI Agent Builder, you can chain multiple agents together. That means you can design several agents to work as a team (e.g., one for research, one for summarizing, one for writing) and then connect that combined agent workflow to Lovable with a single prompt.
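The idea of agents working as a team can be pictured as a simple pipeline, where each agent's output becomes the next agent's input. The sketch below uses stand-in functions instead of real LLM calls, and the names `chain`, `research`, `summarize`, and `write` are purely illustrative. Momen handles this wiring visually, so no code like this is needed there.

```python
from typing import Callable

Agent = Callable[[str], str]


def chain(*agents: Agent) -> Agent:
    """Build one agent that feeds each agent's output into the next."""
    def run(text: str) -> str:
        for agent in agents:
            text = agent(text)
        return text
    return run


# Stand-in agents; real ones would call an LLM.
def research(topic: str) -> str:
    return f"notes on {topic}"


def summarize(notes: str) -> str:
    return f"summary of {notes}"


def write(summary: str) -> str:
    return f"article from {summary}"


team = chain(research, summarize, write)
print(team("solar power"))  # prints "article from summary of notes on solar power"
```

From Lovable's point of view, the chained team still looks like a single agent with one input and one output, which is why one prompt is enough to connect it.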

Is Momen free to use? Are there any limits?

Yes — you can start for free. The free plan gives you 100k AI Points for building AI agents.

Do I need to know what an “Actionflow” is to build an agent?

No. Actionflows are optional. You can create a fully working agent without ever touching them. But if you want your AI to trigger multi-step workflows (like “send email → update database → call API”), that’s when Actionflows shine.

Can I test/debug my agent before connecting it to Lovable?

Yes — right inside Momen. There’s a built-in debug panel where you can test the agent, see every step (inputs, outputs, tool calls), and fix issues before touching Lovable.

What models does Momen support? Can I choose between GPT and Gemini?

Yes. You can pick from:

OpenAI models (GPT-4o mini, GPT-4o, GPT-4.1, GPT-4.1 mini, GPT-4.1 nano, GPT-o3 mini, GPT-o4 mini)

Gemini models (2.5 Pro, 2.5 Flash, 2.0 Flash for images)

Does the AI automatically work in Lovable once I paste the prompt?

Yes. The prompt tells Lovable exactly how to connect to your Momen agent.

What do I do if Lovable gives an error after pasting the prompt?

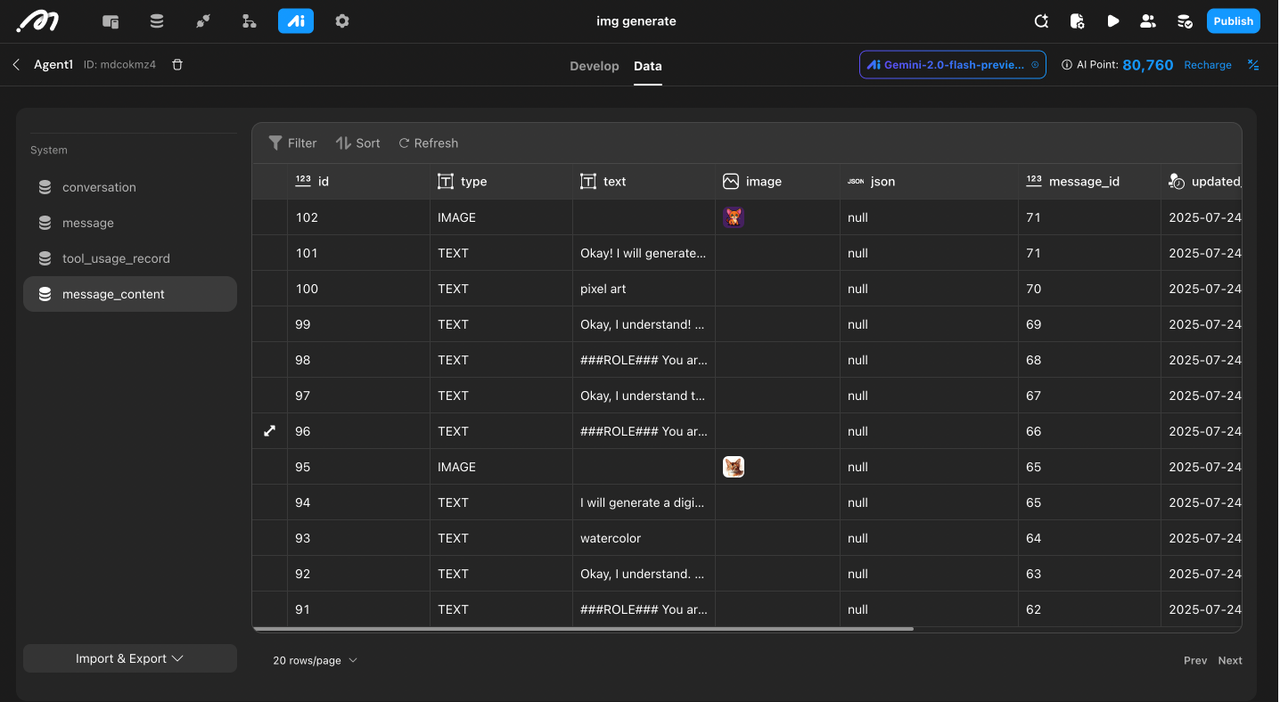

Check the "Data" tab in your Momen AI agent panel. You should see the details of every round of the conversation.

If everything looks right in Momen, the problem is likely on the Lovable side, so debug there.

How do I know if the agent is actually responding inside Lovable?

If your Lovable app feels unresponsive or you’re not sure the AI is doing anything, you can confirm directly in Momen’s agent logs:

Open your Momen project's agent panel → Data tab.

Here you’ll see every request your agent received from Lovable, along with the response status and details.

Resources & Next Steps

🔗 Learn more about building AI agents in Momen → Momen AI Docs

🔗 See how to build workflows → Actionflow Guide

🔗 See how to connect with APIs → API Guide

🔗 Explore the Momen Community Forum → forum.momen.app

🎬 Subscribe to Momen's YouTube channel → https://www.youtube.com/@Momen_HQ